
NLP: TF-IDF (Term Frequency-Inverse Document Frequency)

It is advisable to go through the basics of Bag of Words before delving into TF-IDF:

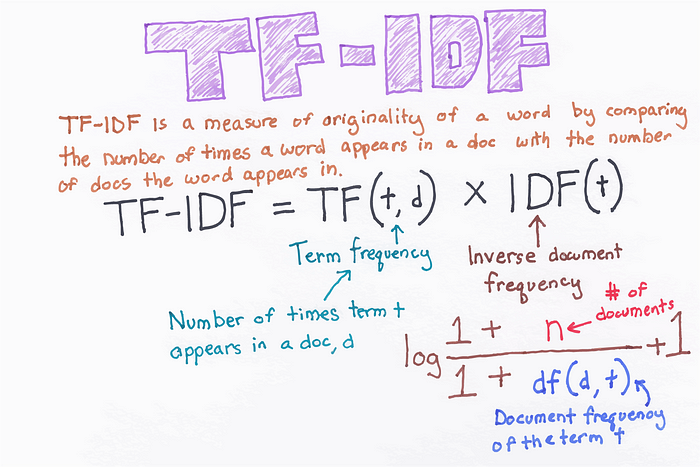

TF-IDF stands for Term Frequency-Inverse Document Frequency. It is a numerical statistic used in natural language processing (NLP), information retrieval, and text mining to measure how important a term is to a document within a larger collection, or corpus.

TF-IDF takes into account both the frequency of a word within a document (term frequency) and the rarity of the word across the entire corpus (inverse document frequency).
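To make the two components concrete, here is a minimal sketch in plain Python. It assumes one common, unsmoothed formulation: term frequency is the word's count divided by the document length, and inverse document frequency is the natural log of the number of documents divided by the number of documents containing the word. The function name `tf_idf`, the whitespace tokenization, and the toy corpus are illustrative choices, not part of any library; real libraries often use smoothed or normalized variants that give different numbers.

```python
import math
from collections import Counter


def tf_idf(corpus):
    """Return one {term: score} dict per document (unsmoothed TF-IDF)."""
    # Naive tokenization: lowercase and split on whitespace (an assumption).
    docs = [doc.lower().split() for doc in corpus]
    n_docs = len(docs)

    # Document frequency: in how many documents does each term appear?
    df = Counter(term for doc in docs for term in set(doc))

    scores = []
    for doc in docs:
        counts = Counter(doc)
        total = len(doc)
        scores.append({
            # TF (count / document length) times IDF (log of N / df).
            term: (count / total) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return scores


corpus = [
    "the cat sat on the mat",
    "the dog sat on the log",
    "the bird flew over the house",
]
scores = tf_idf(corpus)
```

In this toy corpus, "the" appears in every document, so its inverse document frequency is log(3/3) = 0 and its score is zero despite being the most frequent word. "cat" appears in only one document, so it scores higher than "sat", which appears in two.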