XGBoost (eXtreme Gradient Boosting) is an efficient, scalable implementation of gradient-boosted decision trees, used for supervised learning tasks such as regression and classification. It builds on the gradient boosting framework and is designed to handle large and complex datasets.

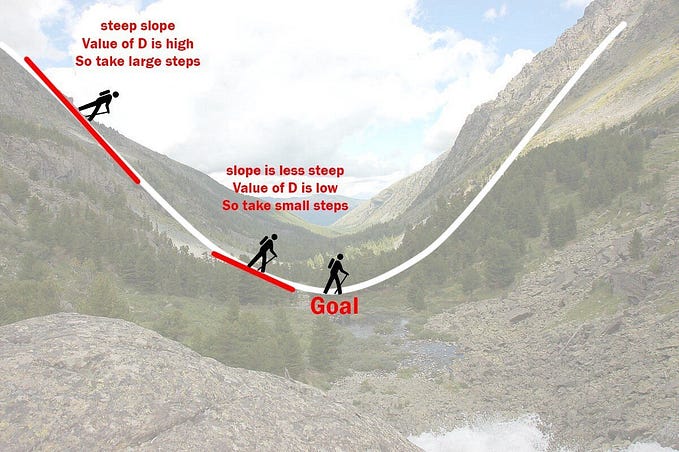

The XGBoost algorithm builds a series of decision trees that are trained sequentially. Each new tree is trained to correct the errors of the ensemble built so far, gradually improving the model’s performance. The approach is called “gradient boosting” because it minimizes a loss function by iteratively adding new models, each fitted to the negative gradient of the loss with respect to the current predictions.
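To make the sequential idea concrete, here is a minimal sketch of plain gradient boosting in pure Python. It is not XGBoost itself: it assumes a squared-error loss (whose negative gradient is simply the residual), uses one-split "stumps" on a single feature instead of full trees, and leaves out XGBoost's regularization and second-order terms. The function names, the toy data, and the `learning_rate` and `n_rounds` values are all made up for illustration.

```python
# Toy gradient boosting for squared-error loss, using one-split "stumps".
# All names here (fit_stump, boost, ...) are illustrative, not XGBoost's API.

def fit_stump(xs, targets):
    """Return (threshold, left_value, right_value) minimizing squared error."""
    best = None
    values = sorted(set(xs))
    for lo, hi in zip(values, values[1:]):
        threshold = (lo + hi) / 2
        left = [t for x, t in zip(xs, targets) if x <= threshold]
        right = [t for x, t in zip(xs, targets) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((t - left_mean) ** 2 for t in left)
               + sum((t - right_mean) ** 2 for t in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    return best[1:]


def predict_stump(stump, x):
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value


def mean_squared_error(ys, preds):
    return sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys)


def boost(xs, ys, n_rounds=50, learning_rate=0.3):
    """Fit stumps one after another, each on the current residuals."""
    base = sum(ys) / len(ys)           # start from a constant prediction
    preds = [base] * len(ys)
    stumps = []
    losses = [mean_squared_error(ys, preds)]
    for _ in range(n_rounds):
        # For the loss 1/2 * (y - f)^2, the negative gradient w.r.t. f is y - f.
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        # Add a shrunken copy of the new stump to the running prediction.
        preds = [p + learning_rate * predict_stump(stump, x)
                 for p, x in zip(preds, xs)]
        losses.append(mean_squared_error(ys, preds))
    return base, stumps, losses


def predict(base, stumps, x, learning_rate=0.3):
    return base + learning_rate * sum(predict_stump(s, x) for s in stumps)


# Toy data: y = x^2 on a small grid (assumed purely for demonstration).
xs = [i / 10 for i in range(20)]
ys = [x ** 2 for x in xs]
base, stumps, losses = boost(xs, ys)
print(f"MSE before boosting: {losses[0]:.4f}, after: {losses[-1]:.4f}")
```

Each round only sees what the previous rounds got wrong, so the training loss recorded in `losses` never increases from one round to the next. The learning rate shrinks each stump's contribution, trading more rounds for smaller, more cautious steps.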

Here is a more detailed explanation of how XGBoost works:

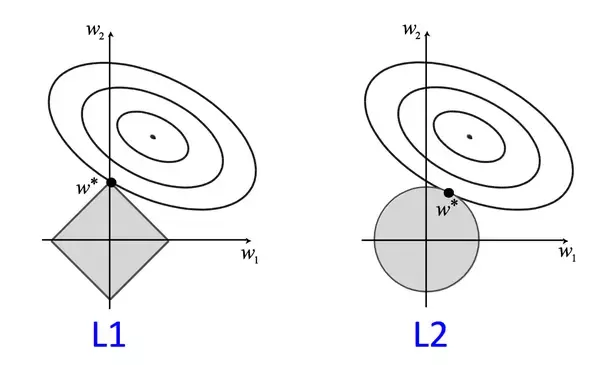

- Objective Function: The XGBoost algorithm optimizes a user-specified objective function with two parts: a loss function and a regularization term. The loss function measures the difference between the predicted values and the actual values, while the regularization term penalizes complex models that are likely to overfit the data.

- Decision Trees: The XGBoost algorithm uses decision trees as the base learners. Each decision tree is a sequence of binary decisions that split the…